Intel’s 10th gen Core i9-10900K is—without a doubt—exactly as Intel has described it: “the world’s fastest gaming CPU.”

Intel’s problem has been weaknesses outside of gaming, and its overall performance value compared to AMD’s Ryzen 3000 chips. With the Core i9-10900K, Intel doesn’t appear to be eliminating that gap, but it could get close enough that you might not care.

Intel

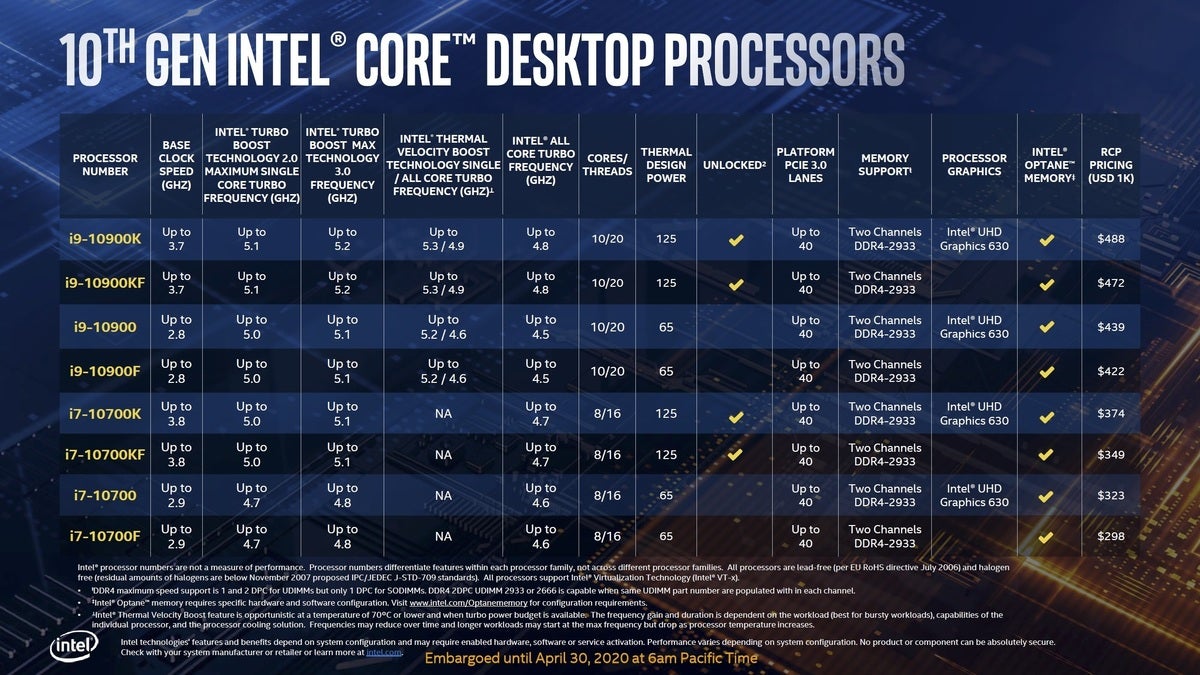

What is Core i9-10900K?

Despite its 10th-gen naming, Intel’s newest desktop chips continue to be built on the company’s aging 14nm process. How old is it? It was first used with the 5th-gen Core i7-5775C desktop chip from 2015. Many tricks, optimizations, and much binning later, we have the flagship consumer Core i9-10900K, announced April 30. The CPU features 10 cores and Hyper-Threading for a total of 20 threads, with a list price of $488.

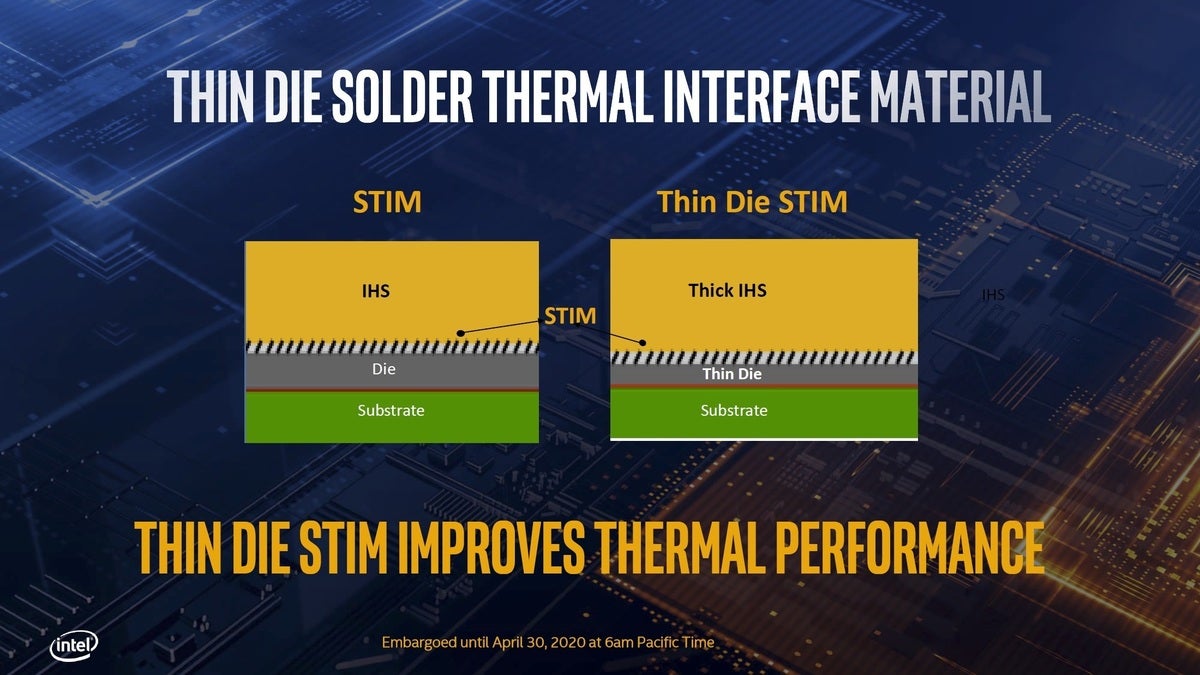

The Core i9-10900K does bring a few changes. Intel officials said the chip uses a thinner die and thinner solder thermal interface material (STIM) to improve thermal dissipation. Intel also had to make a thicker heat spreader (that little metal lid to keep you from crushing the delicate die).

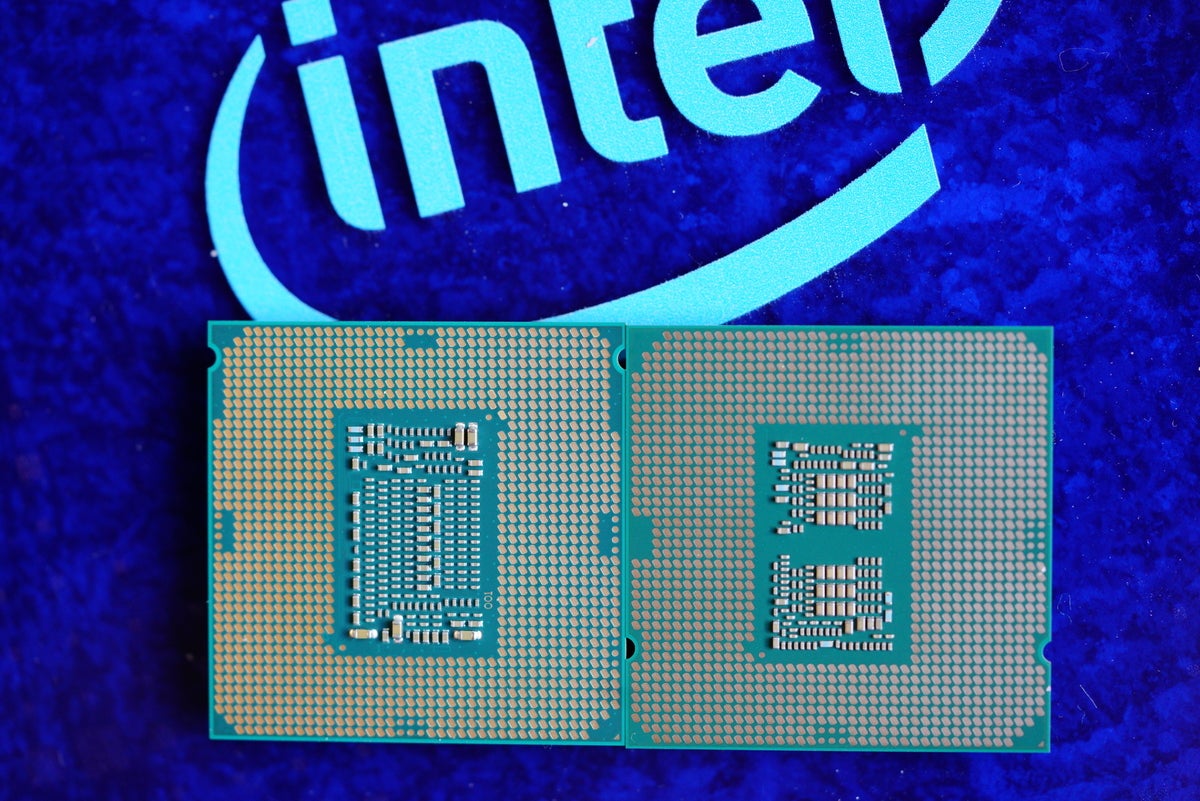

Gordon Mah Ung

A 10th-gen Core i9-10900K (right) next to an 8th-gen Core i7-8700K (left). There are subtle differences in the “wings” that the load plate clamps onto, and the notches in the substrate are on opposite corners.

Why make the die and STIM thinner, but the heat spreader thicker? The reason is cost. Intel said it had to keep the height of the heat spreader on all of its CPUs the same so they’d be compatible with existing cooling hardware. Intel officials did say the materials used for the heat spreader help compensate for that compromise, so overall the new chip is better at power dissipation.

Intel

The 10th-gen Comet Lake S CPUs feature a thinner die and thermal interface material, plus a thicker heat spreader to help improve heat dissipation.

A new socket?!

It’s true: Intel’s new 10th-gen CPUs bring with them a new LGA1200 socket that is, of course, incompatible with the previous 9th-gen CPUs. Intel took flak for introducing a new chipset with its 8th-gen desktop chips that was incompatible with the previous generation, so you can understand the anger of those who just want to upgrade the CPU, not also the motherboard.

Gordon Mah Ung

The backside of an LGA1151 8th-gen Core i7-8700K (left), and an LGA1200 10th-gen Core i9-10900K (right).

The LGA1200 socket and new Z490 chipset don’t seem to change much. You still install the CPU almost the same way, and if you have an existing LGA1151 cooler, it’ll still fit. Sadly, rumors of PCIe 4.0 on the Z490 proved untrue, leaving Intel at a disadvantage compared to Ryzen 3000 chips that have the faster interface for SSDs and GPUs.

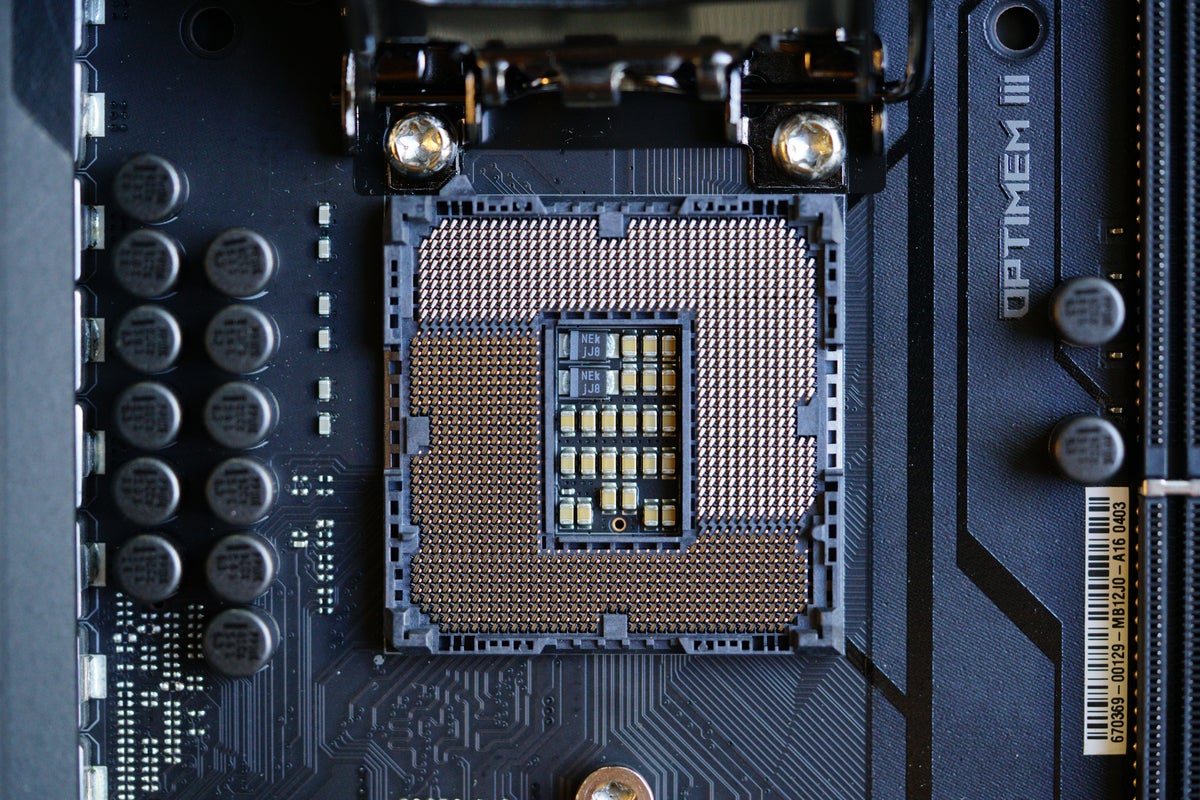

Gordon Mah Ung

A new LGA1200 socket is required to run a 10th-gen Comet Lake S CPU.

How We Tested

For this review, we stick with Intel’s flagship, the $488 Core i9-10900K. Its natural competitor is AMD’s Ryzen 9 3900X with 12 cores and 24 threads. The Ryzen 9 3900X has a list price of $499, but its street price as of this writing is actually more like $410 on Amazon. It comes with a decent air cooler, too.

The only other CPU we’d compare would be the Ryzen 9 3950X, but with a street price of $720 on Amazon (as of this writing) the math doesn’t work. So we’ll stick with the 12-core Ryzen 9 3900X. It was tested on an MSI X570 MEG Godlike with 16GB of DDR4/3600 in dual-channel mode. We typically use the same SSD on all platforms, but we feel that’s unfair to AMD, which can run PCIe 4, so we used a Corsair MP600 PCIe 4.0 drive.
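The pricing argument above can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the prices quoted in this review. The helper name `premium_percent` is made up for illustration, and comparing Intel's list price against AMD street prices is an assumption that mirrors how the figures are quoted here.

```python
# Prices quoted in the review (US dollars). The Core i9-10900K figure is
# Intel's list price; the two Ryzen figures are the Amazon street prices cited.
PRICES = {
    "Core i9-10900K": 488,
    "Ryzen 9 3900X": 410,
    "Ryzen 9 3950X": 720,
}


def premium_percent(price, baseline):
    """Percent by which `price` exceeds `baseline` (negative if cheaper)."""
    return (price / baseline - 1) * 100


baseline = PRICES["Core i9-10900K"]
for chip, price in PRICES.items():
    delta = premium_percent(price, baseline)
    print(f"{chip}: ${price} ({delta:+.1f}% vs. Core i9-10900K)")
```

By this measure the Ryzen 9 3900X costs roughly 16 percent less than the Core i9-10900K, while the Ryzen 9 3950X costs roughly 48 percent more, which is why it is left out of the comparison.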

For the Core i9-10900K, we used an Asus ROG Maximus XII Extreme board with 16GB of DDR4/3200 in dual-channel mode and a Samsung 960 Pro SSD.

Both systems used Windows 10 1909, identical GeForce RTX 2080 Ti Founders Edition cards, and NZXT Kraken X62 coolers with fans set to 100 percent. Both boards were run open-case, with matching desk fans blowing cool air directly onto the graphics cards and the boards’ socket area. All systems used the same drivers, the latest UEFI versions, and the latest Windows security updates.

Due to time and other constraints, we ran the boards with MCE and PBO set to Auto, and 2nd-level XMP and AMP profiles selected. While these factory settings are beyond what is stock, we think they’re close to what a consumer will see out of the box.

Gordon Mah Ung

The 10th-gen Core i9-10900K sits in the LGA1200 socket of an Asus ROG Maximus XII Extreme.

Core i9-10900K Rendering Performance

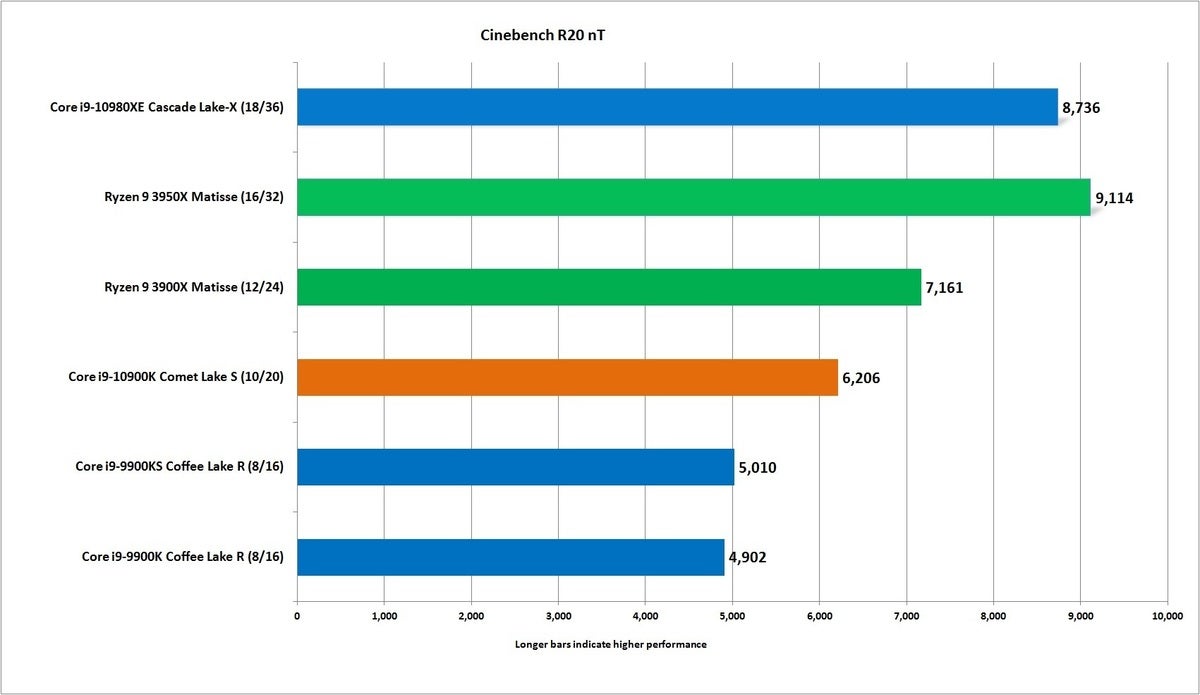

We’ll kick this off where we normally do: Maxon’s Cinebench R20. It’s a 3D modelling test built on the company’s Cinema4D engine, and it’s integrated into such products as Adobe After Effects. Like most 3D modelling apps, more cores and more threads typically yield more performance.

Our results for the Core i9-10900K and Ryzen 9 3900X are fresh, but we decided to sprinkle in results from previous reviews for more context. Although those older results are not using the latest version of Windows, Cinebench is very reliable. The R20 version uses AVX2 and AVX512 and takes about three times as long to run as the older R15 version. That means any boost performance should matter less.

Remember these results, because for the most part they won’t change much as we move through multi-threaded performance: Cores matter. The Ryzen 9 3900X’s 12 cores prevail over the Core i9-10900K’s 10. If it’s any consolation, the latest Core chip fares noticeably better than the Core i9-9900K and Core i9-9900KS, which were both handcuffed by their “mere” 8 cores.

IDG

The 10-core chip wasn’t expected to outgun AMD’s 12-core, but at least it’s closer.

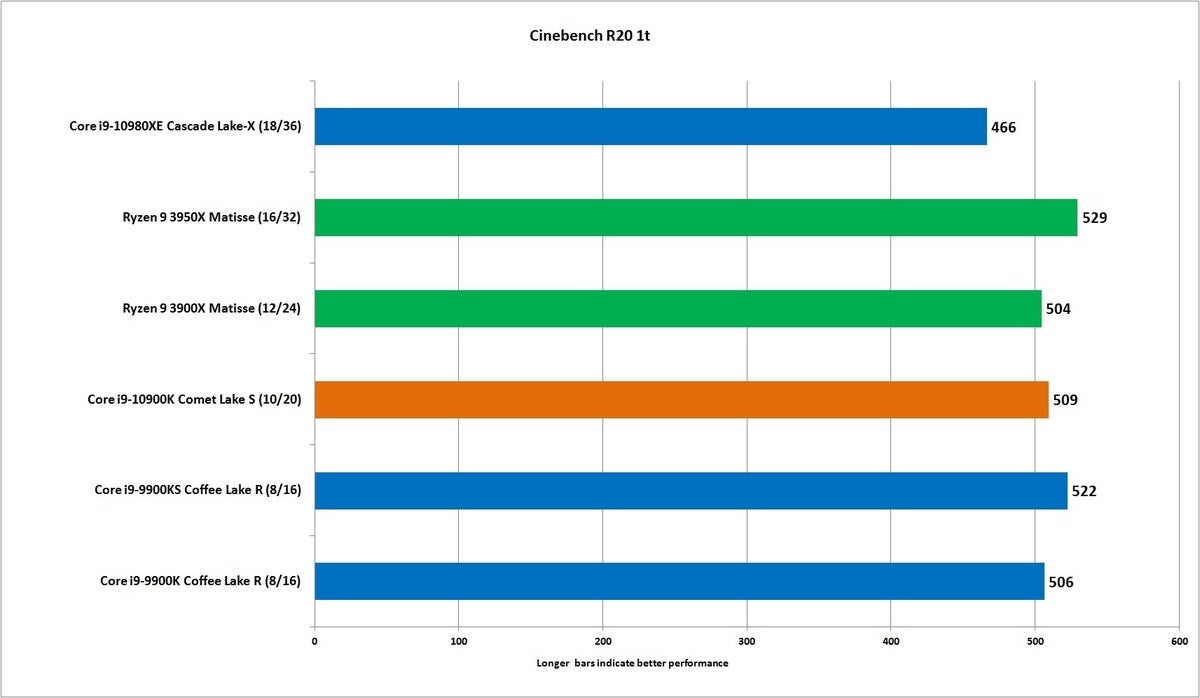

Switching Cinebench R20 to single-threaded mode constrains the load to a single CPU core. The results are so close that no one would or should care. We expected the Core i9-10900K to have a little more of an edge, but maybe it’s the luck of the draw.

IDG

Single-core performance among the consumer chips is pretty much dead-even.

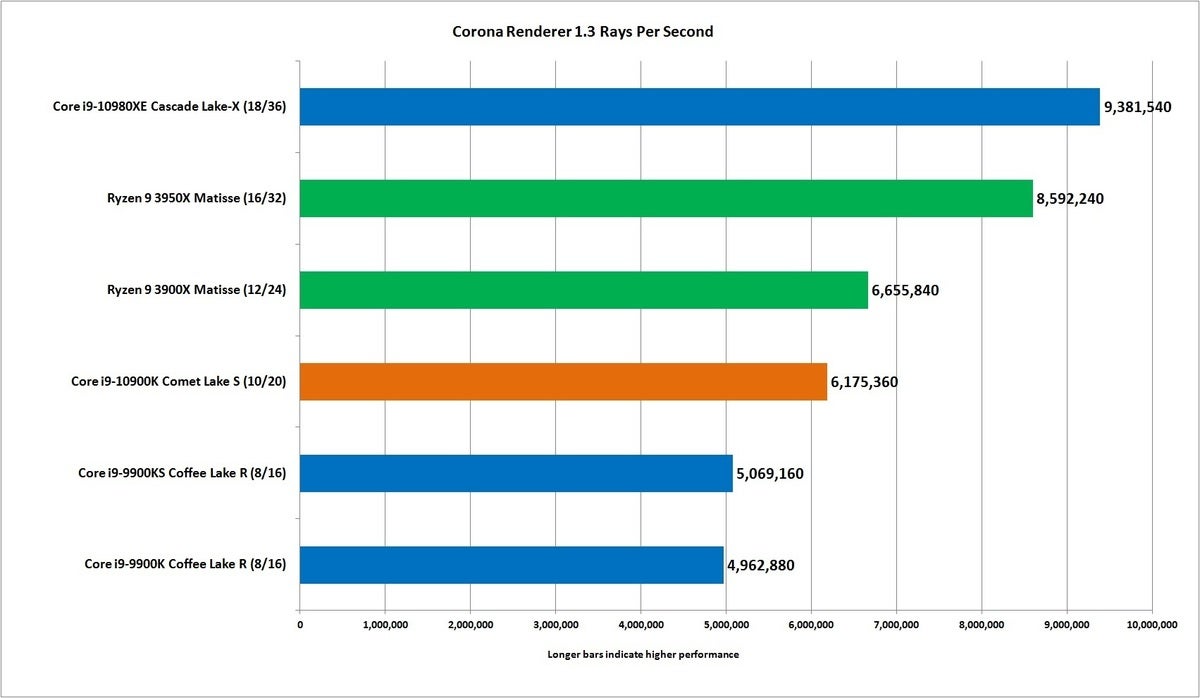

Corona is an unbiased photo-realistic 3D renderer, which means it takes no shortcuts in how it renders a scene. It loves cores and threads, so the results here follow the trend, but the Core i9 finishes just 7 percent shy of the Ryzen 9. In Cinebench R20, the Ryzen 9 had a larger 15-percent advantage.

IDG

The Corona renderer closes the gap between the Ryzen 9 and Core i9 to about 7 percent.

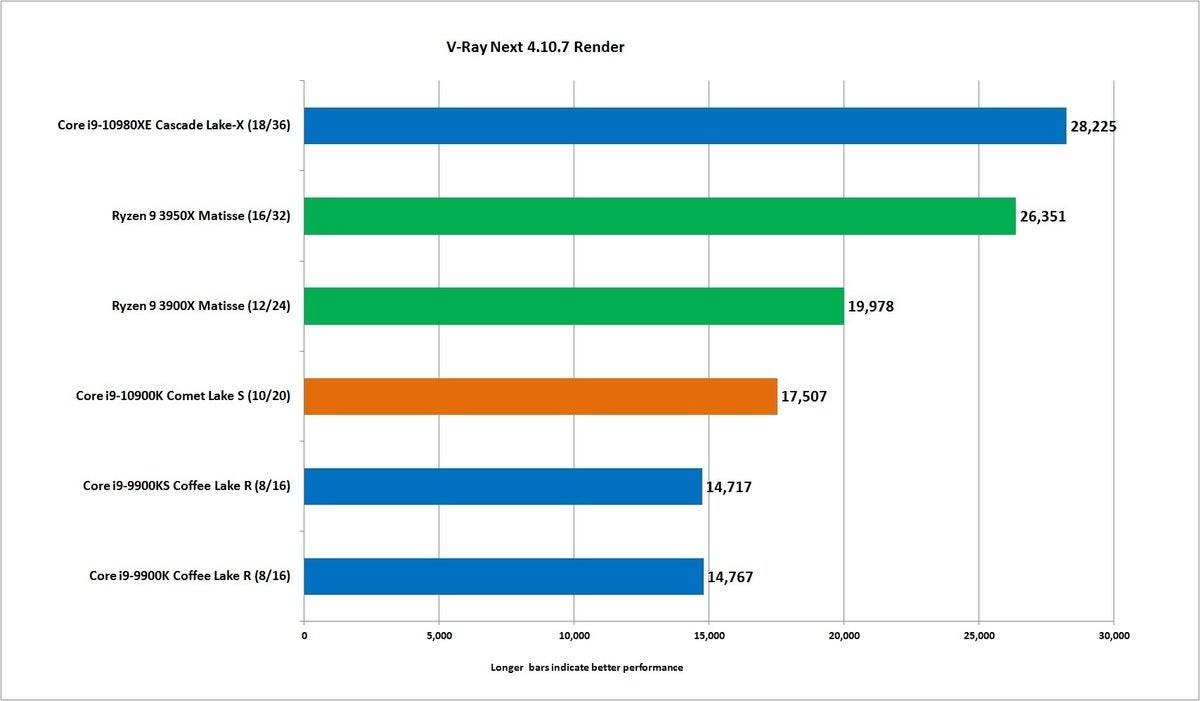

The Chaos Group’s V-Ray Next, like Cinema4D’s engine, is a biased renderer: it takes shortcuts to finish projects so you can, you know, win an Academy Award like V-Ray has. It loves thread count, so guess what: The Ryzen 9 comes out about 14 percent faster than the Core i9.

IDG

V-Ray is a biased renderer like Cinema4D, and performance tracks closely, with the Ryzen 9 about 14 percent faster than the newest Core i9.

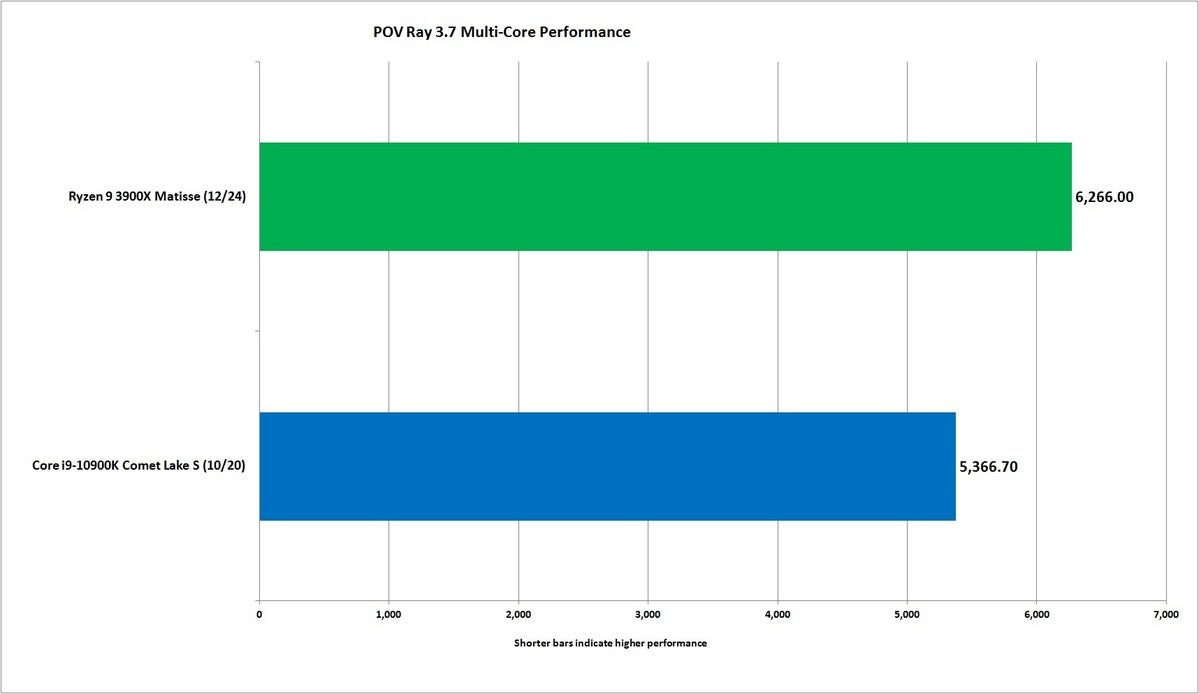

Our last rendering test measures ray tracing performance using the latest version of POV Ray. The Ryzen 9 comes in almost 17 percent faster than the Core i9. That’s pretty close to the Ryzen 9’s thread-count advantage: with 24 threads to the Core i9’s 20, it has 20 percent more.
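To make the thread-count comparison concrete, here is a small sketch of the arithmetic. The thread counts and the "almost 17 percent" figure come from this review. The function name `percent_advantage` and the idea of dividing one percentage by the other are illustrative assumptions, not part of the benchmark itself.

```python
def percent_advantage(a, b):
    """Percent by which `a` exceeds `b`."""
    return (a / b - 1) * 100


ryzen_threads = 24    # Ryzen 9 3900X: 12 cores, 24 threads
core_i9_threads = 20  # Core i9-10900K: 10 cores, 20 threads

thread_advantage = percent_advantage(ryzen_threads, core_i9_threads)
measured_advantage = 17.0  # "almost 17 percent" in POV Ray, per the review

# Share of the theoretical thread advantage that shows up in the result.
# This ignores clock speed and architecture differences, so treat it as a
# rough indicator only.
realized_share = measured_advantage / thread_advantage

print(f"Thread-count advantage: {thread_advantage:.1f}%")
print(f"Measured advantage:     {measured_advantage:.1f}%")
print(f"Share realized:         {realized_share:.0%}")
```

The same function applied to the 14 percent (V-Ray) or 7 percent (Corona) results shows how much further those tests fall from simple thread scaling.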

IDG

POV Ray puts the Ryzen 9 ahead of the Core i9 by almost 17 percent.

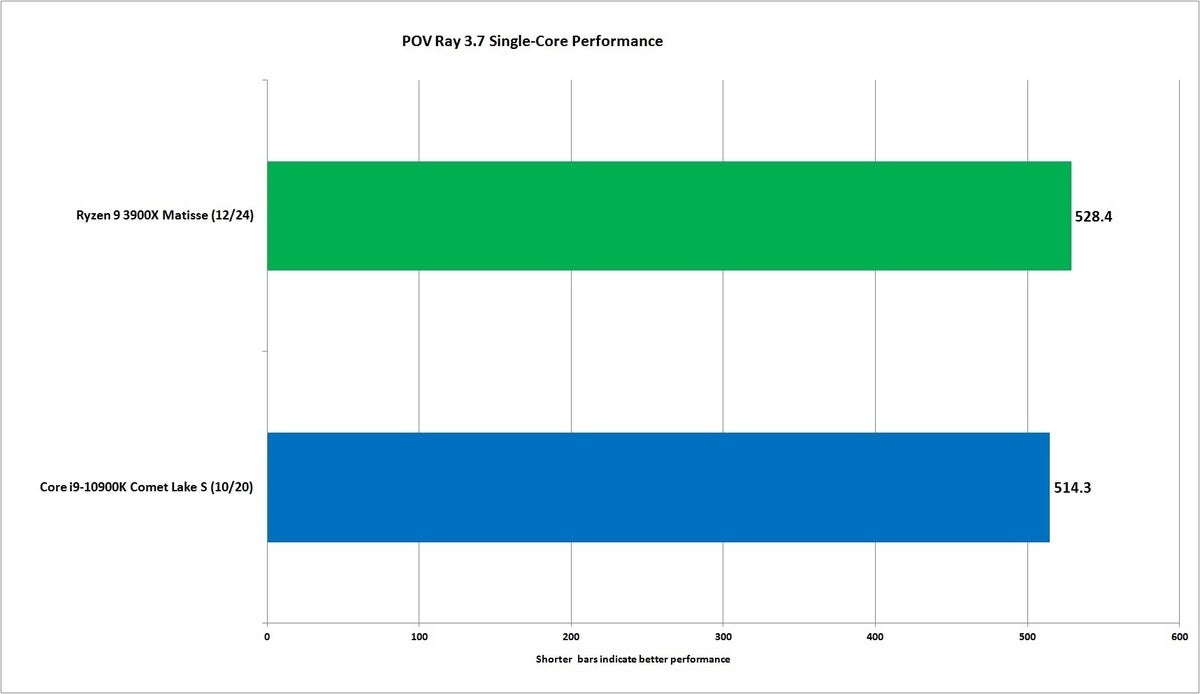

Switching POV Ray to single-threaded performance, the Ryzen 9 squeezes by the Core i9, which surprised us—we thought the Core i9 would take the lead.

IDG

Single-threaded performance of the Ryzen 9 is just under 3 percent faster than the Core i9.

Core i9-10900K Encoding Performance

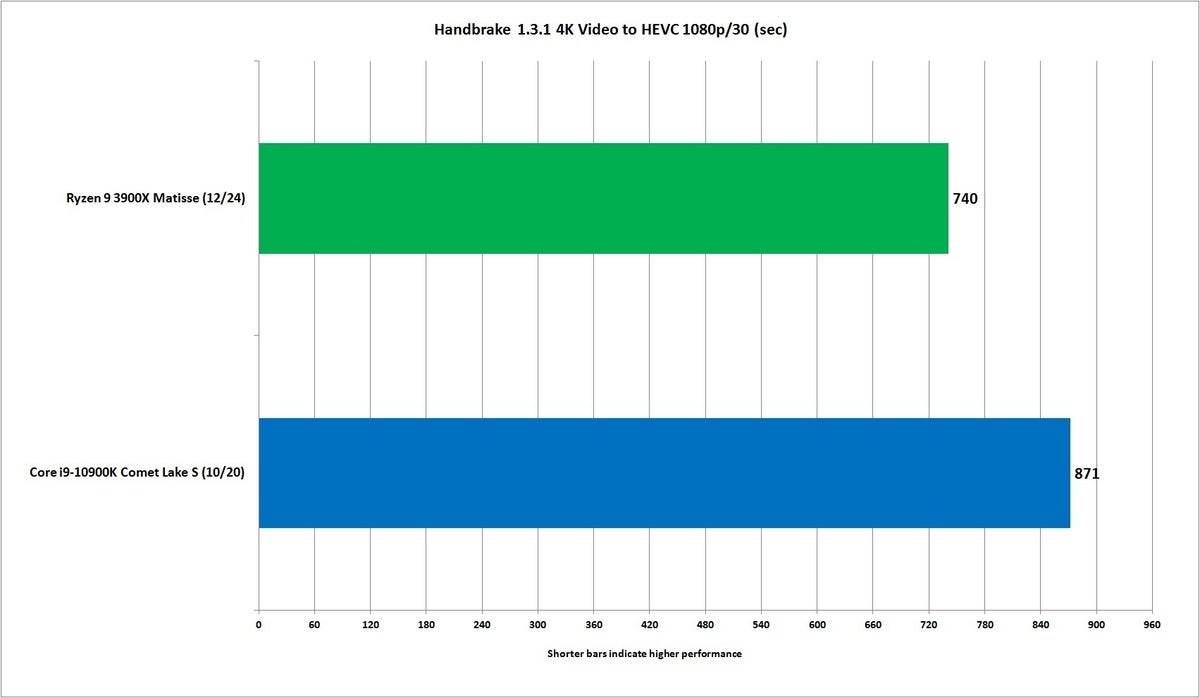

Video encoding needs fast CPUs, too. That’s why we use the latest version of HandBrake to convert a 4K video short to 1080p using H.265. Using the CPU to finish the task, the 12-core Ryzen 9 finished with a 16-percent advantage over the 10-core Core i9. So far, that’s pretty much everything we’ve expected.

IDG

We use HandBrake to transcode a 4K video using the HEVC/H.265 codec.

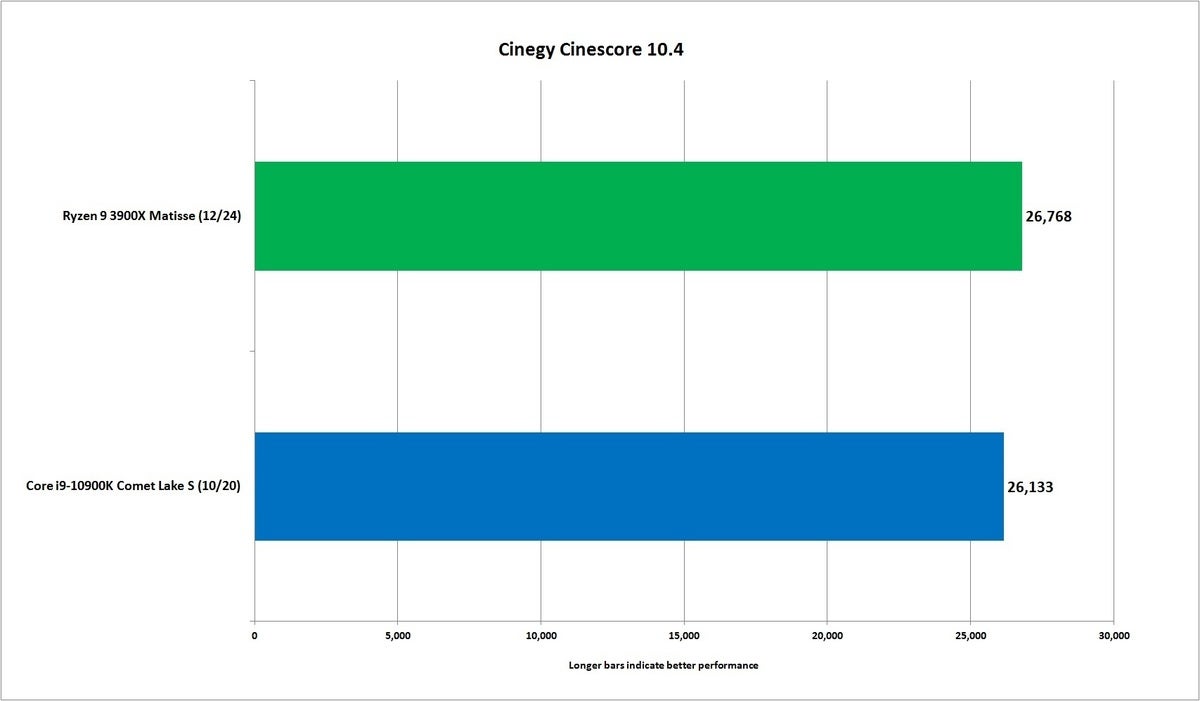

Our next test uses Cinegy’s Cinescore to assess CPU and GPU performance across several dozen broadcast-industry profiles from SD to 8K, using codecs ranging from H.264 to MPEG2, XDCAM, and AVC Ultra, as well as Nvidia H.265 and Daniel 2. It runs entirely in RAM to remove storage as a bottleneck. (Note: The version of Cinescore we use is older and no longer works without setting an older date on the PC, because the codec license in that version has timed out.)

While the Core i9 doesn’t win, it gets awfully close to the Ryzen 9. This could mean Cinescore and its codecs don’t care that much about thread count, or the higher clock speeds of the Core i9 may be of more value. Sorry, Ryzen 9 fans.

IDG

It’s too close for comfort for the Ryzen 9: The Core i9 comes within 3 percent of its performance.

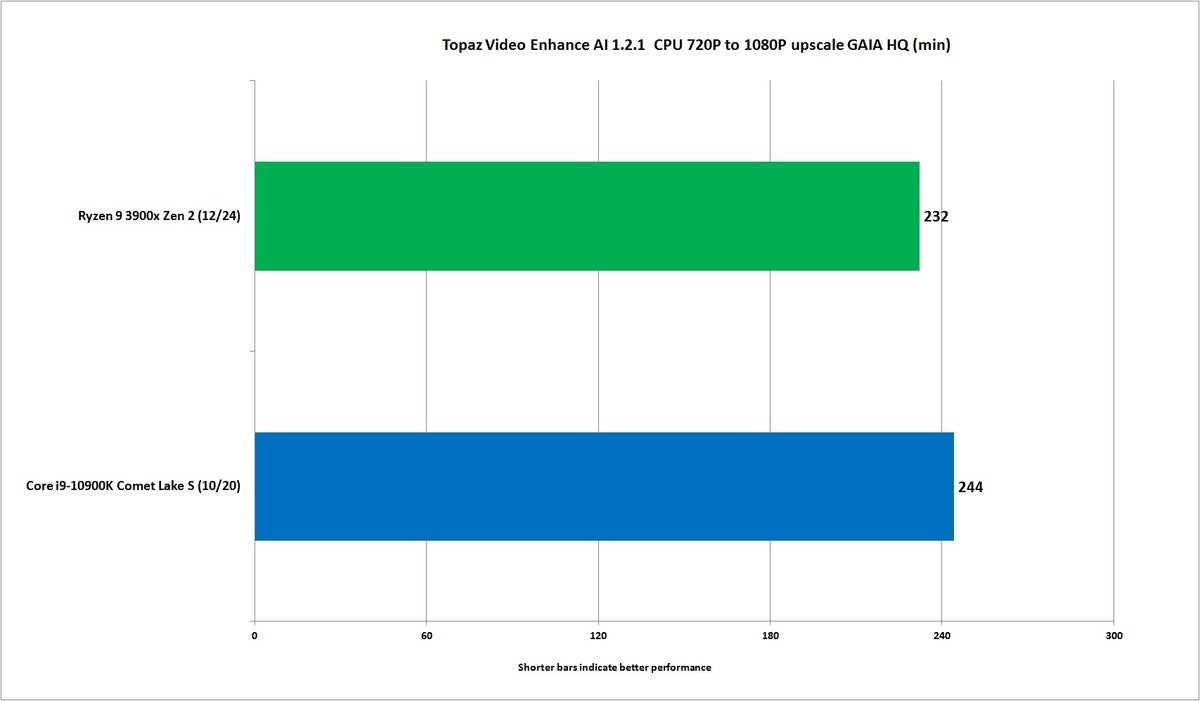

Our next test is a video test, but not in a traditional sense. While standard encoding or transcoding isn’t all that smart in how it downsamples or upsamples video, Topaz Labs’ Video Enhance AI claims to look at every frame and use machine-learning inference to decide what will make each frame look better on the upscale, based on studying other videos. For the test, we take a two-minute family video shot on a Kodak video camera and upscale it from 720p to 1080p, using the app’s Gaia HQ preset.

This upscale would typically be done on a GPU, where it would be significantly faster, but we set it to use the CPU cores instead. Topaz Labs uses Intel’s own OpenVINO for the deep learning. Doing a frame-by-frame upscale isn’t easy and still takes literally hours to complete. The Ryzen 9 finishes with about a five-percent advantage over the Core i9. Too close for comfort, but a win is a win.

IDG

Topaz Labs’ Video Enhance AI uses machine learning to decide how to upscale video.

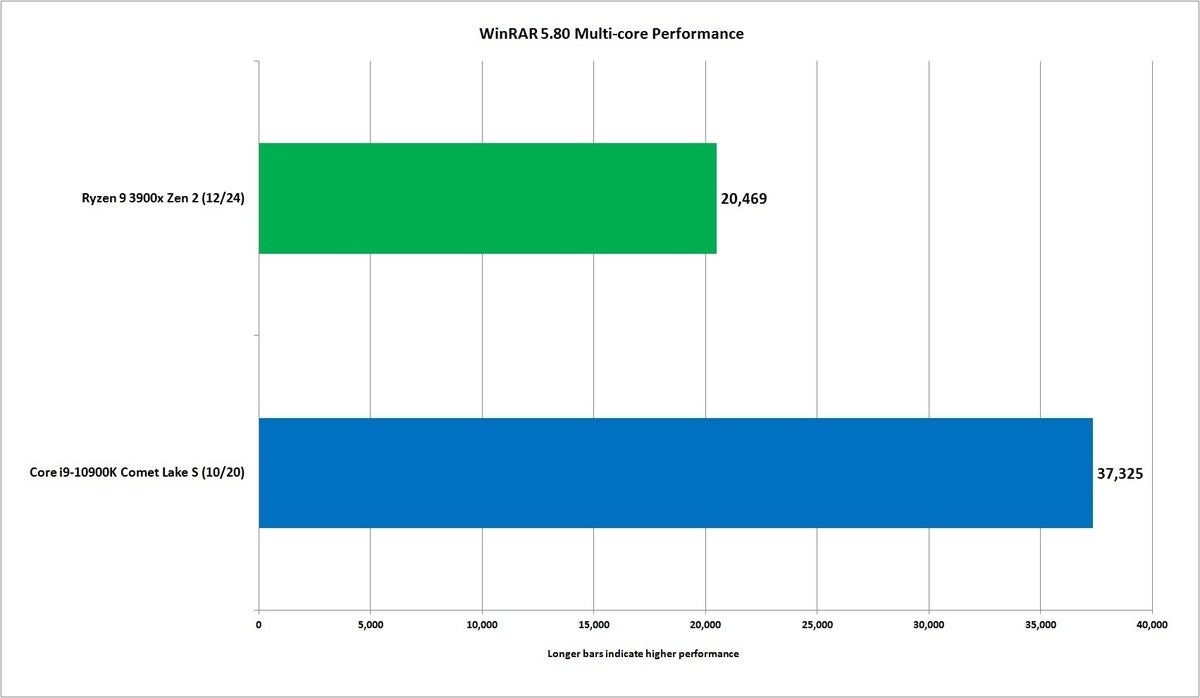

Core i9-10900K Compression Performance

Moving on to compression and decompression performance, we first use RARLab’s WinRAR. As with prior Ryzen CPUs, the result is bad—a loss for the Ryzen 9 and a big win for the Core i9. The Ryzen architecture has long performed poorly here. In the built-in benchmark, the Core i9 is 82 percent faster.

IDG

No surprise, the Core i9 walks away with a big win here because the Ryzen part just runs poorly in WinRAR.

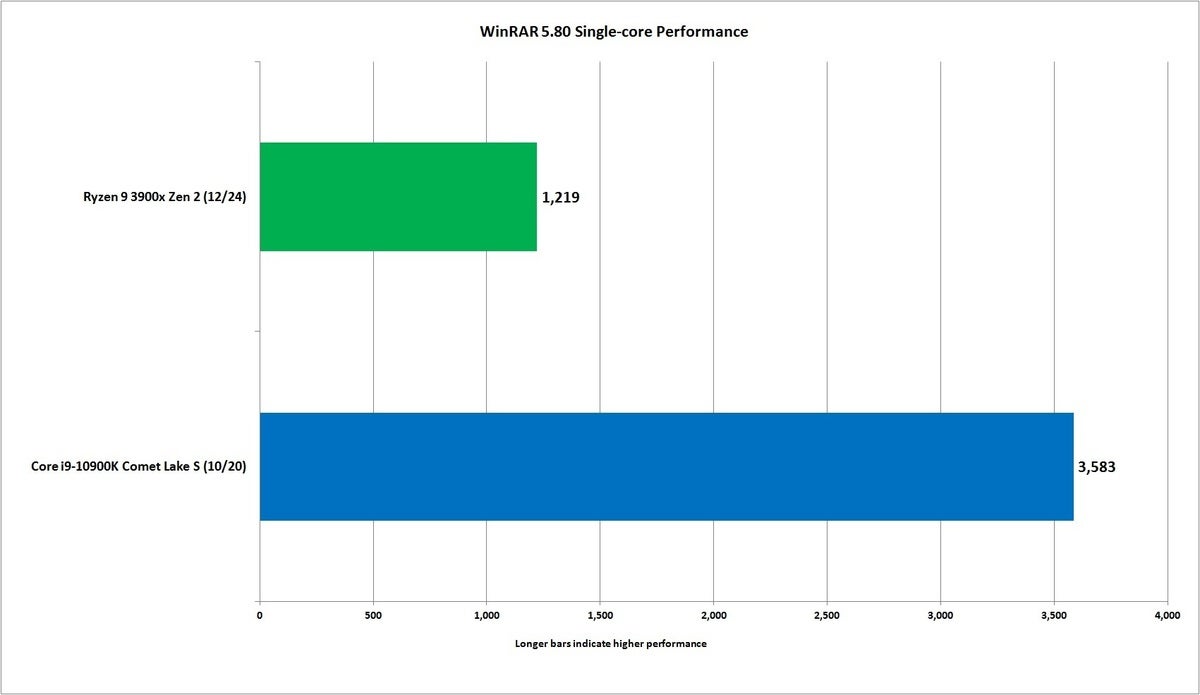

Switching WinRAR to single-core performance, nothing changes except the Core i9’s win grows to a 194-percent advantage. We use WinRAR because it’s worth pointing out that some software will heavily penalize Ryzen’s microarchitecture. Intel has fielded software support to companies for much longer than AMD, and it shows.

IDG

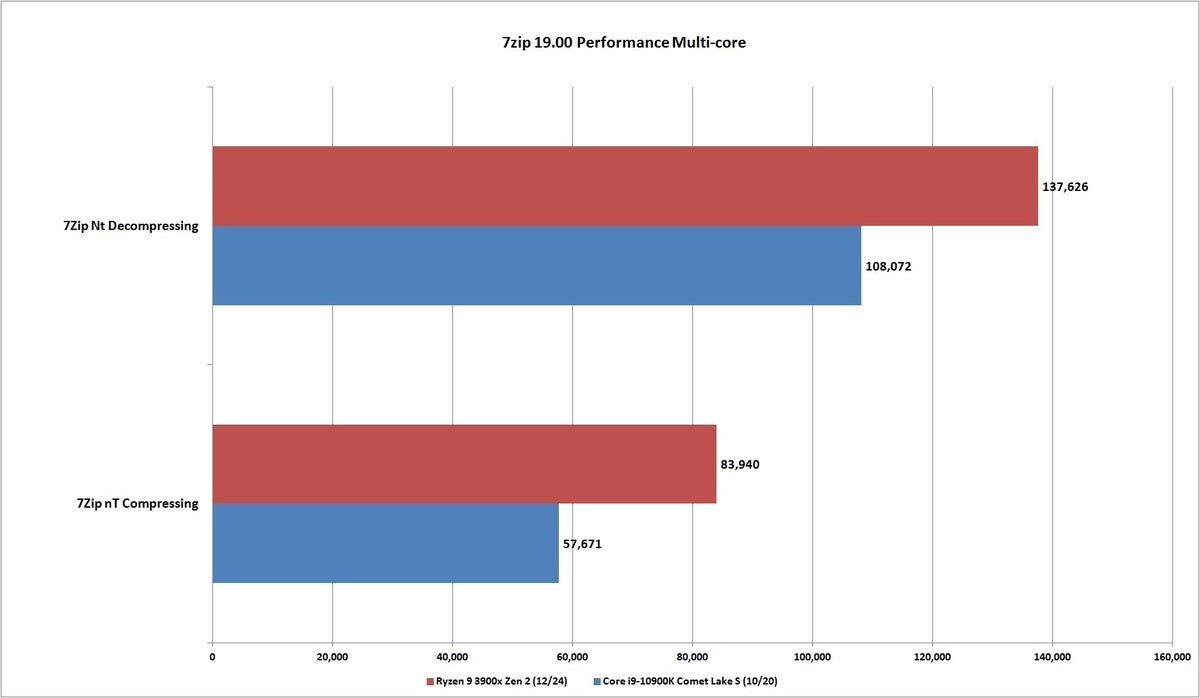

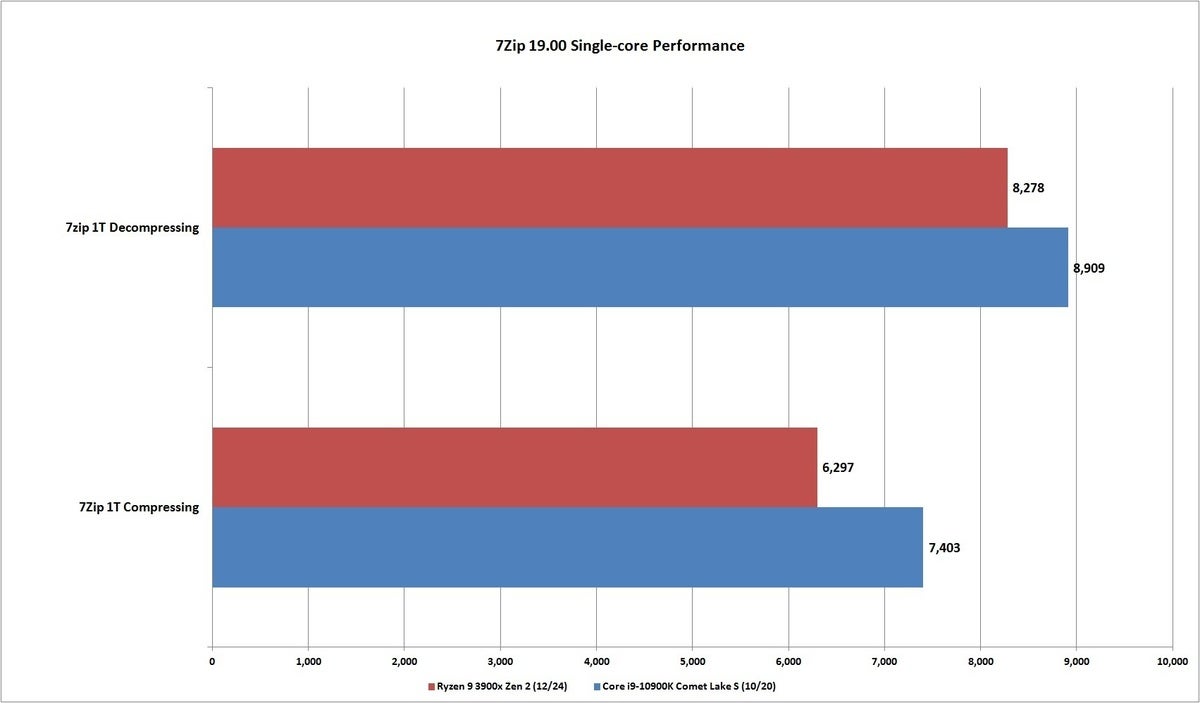

The gap closes with the much more popular (and free) 7Zip. We set the built-in benchmark to use the number of threads available to the CPU: in this case, 24 for Ryzen 9 and 20 for Core i9. The first result is multi-core.

IDG

The multi-core performance of the Core i9 can’t keep up with the Ryzen 9 in 7Zip.

Decompressing performance, according to the developer, leans heavily on integer performance, branch prediction, and instruction latency. Compressing performance leans more on memory latency, cache performance, and out-of-order performance. It doesn’t matter either way, as the Core i9 falls to the Ryzen 9 in both areas. The Ryzen 9 is about 21 percent faster in decompression, and a whopping 44 percent faster in compression.

Moving to single-threaded performance, we see the Core i9’s fortunes reverse, with about 7 percent faster decompression and 17 percent faster compression.

IDG

Single-threaded 7Zip performance is a solid win for the Core i9.

Core i9-10900K Gaming Performance

Intel didn’t call the Core i9-10900K ‘the best CPU for multi-threaded performance’ because it likely knew it wasn’t going to squeeze out the Ryzen 9 3900X. On the other hand, Intel’s chips have led in gaming performance ever since the first Ryzen was introduced.

Rather than have you scroll through 16 charts, we combined 16 results into one megachart, from a list of games run at varying resolutions and settings. We’ll run through it from top to bottom.

In Far Cry New Dawn we see the Core i9 vary from about 9 percent to about 14 percent over the Ryzen 9, depending on the resolution and game setting. As you jack up the resolution or the game setting, the test is increasingly GPU-bound.

Ashes of the Singularity: Escalation, long the poster child for DX12 performance, is hailed for actually using the extra CPU cores available to gamers today. For this run, we use the Crazy quality preset and select the CPU-focused benchmark, which is supposed to throw additional units into the game. The result: about a 7.5-percent advantage for the Core i9-10900K.

Chernobylite, an early-access game, features a benchmark to showcase its beautiful graphics. Set to high, where the game is not limited by GPU performance, we see that familiar 7.9-percent advantage for the Core i9 over the Ryzen 9. As we crank up the graphics from high to ultra, it becomes an increasingly GPU-bound test.

The only red (AMD) bar longer than a blue (Intel) bar is in Civilization VI: Gathering Storm. Unfortunately for the Ryzen 9, this particular test measures how long it takes for the computer to make a move, and a shorter time is better. The Core i9 is about 6.5 percent faster.
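Because the chart mixes higher-is-better results (frame rates) with a lower-is-better result (turn time), it helps to spell out how a "percent faster" figure can be derived in each case. The sketch below is one common convention, not necessarily the one used for the chart. The function `percent_faster` and all input numbers are hypothetical and are not taken from our test data.

```python
def percent_faster(candidate, baseline, lower_is_better=False):
    """Percent by which `candidate` outperforms `baseline`.

    For higher-is-better metrics (frames per second) the ratio is
    candidate / baseline. For lower-is-better metrics (seconds per turn)
    the ratio is inverted, so a shorter time yields a positive result.
    """
    if lower_is_better:
        ratio = baseline / candidate
    else:
        ratio = candidate / baseline
    return (ratio - 1) * 100


# Hypothetical frame rates: 109 fps versus 100 fps.
fps_gain = percent_faster(109, 100)

# Hypothetical turn times: 8.0 seconds versus 10.0 seconds.
turn_gain = percent_faster(8.0, 10.0, lower_is_better=True)

print(f"Frame-rate advantage: {fps_gain:.1f}%")
print(f"Turn-time advantage:  {turn_gain:.1f}%")
```

Note that a lower-is-better result can also be expressed as a percent reduction in time, which gives a smaller number for the same data, so it is worth checking which convention a chart uses before comparing figures.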