Ever since Apple announced a new system that would scan iCloud photos to battle against child abuse, there’s been some controversy among cryptographers worried the scanning system undermines privacy. Now, the German parliament has also spoken up against the new tech, reports 9to5Mac.

German parliament writes a letter to Tim Cook asking him to reconsider the CSAM scanning system

Apple’s new technology uses hashing to discover CSAM, or child sex abuse material, in a user’s iCloud Photos. If CSAM is discovered, the photo will be reviewed manually. The system will currently be available only in the US, but that doesn’t stop other countries’ governments from worrying that it could be used as a form of surveillance. Manuel Hoferlin, chairman of the German parliament’s Digital Agenda committee, has written a letter to Tim Cook raising concerns about the new technology. He stated that Apple is going down a “dangerous path,” undermining “safe and confidential communication.”

Hoferlin also adds that the new system could become the largest surveillance instrument in history, and that Apple would lose access to large markets if the company decided to deploy it.

Apple previously addressed the privacy-related concerns raised by cryptographers, stating that it will refuse any government request to use the CSAM scanning system for other purposes, and that the tech will be used only to battle child abuse.

Cupertino has also stated the scan for child abuse material is not going to analyze any photos people have locally on their iPhones, but just the ones that have been uploaded to iCloud. Additionally, the company announced it will publish a Knowledge Base article with the root hash of the encrypted CSAM hash database, and users will be able to inspect it on their device.
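To make the idea of checking a published root hash more concrete, here is a minimal sketch of comparing a locally computed digest against a published value. It rests on loud assumptions: the article does not say how Apple computes the root hash, so plain SHA-256 over the raw bytes is used as a stand-in, and `database_digest`, `matches_published`, and the sample data are hypothetical names made up for illustration.

```python
import hashlib
import hmac


def database_digest(db_bytes: bytes) -> str:
    # Assumption: SHA-256 over the whole blob stands in for the real root hash.
    return hashlib.sha256(db_bytes).hexdigest()


def matches_published(db_bytes: bytes, published_hex: str) -> bool:
    # Constant-time comparison of the local digest with the published one.
    return hmac.compare_digest(database_digest(db_bytes), published_hex.lower())


# Hypothetical data: a stand-in for the on-device database blob.
local_db = b"example encrypted database blob"
published = database_digest(local_db)  # pretend this came from the article

ok = matches_published(local_db, published)
tampered = matches_published(local_db + b"x", published)
```

In this toy setup `ok` is true for the untouched blob, while any change to the bytes makes the comparison fail.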

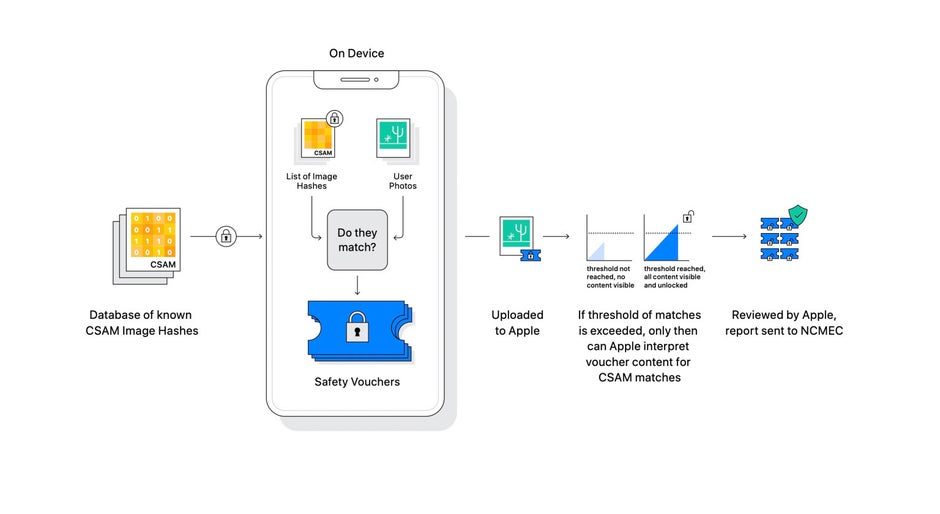

In this photo, you can see how the scanning system will work. The entire scan will be done locally on the iPhone:

Illustration detailing the CSAM system

In addition, Apple clarified earlier that the CSAM detection feature will work only in iCloud Photos and will not scan videos. If a user doesn’t have photos stored in iCloud Photos, the CSAM scanning will not run at all.

This basically means that if a user wants to opt out of CSAM scanning, all they need to do is disable iCloud Photos on their iPhone.

Apple has said it believes the new CSAM tech is better and more private than what other companies have implemented on their servers, as the scanning will be done entirely on the iPhone and not on the server. Apple has also stated that other companies’ implementations require every single photo a user stores on a server to be scanned, even though the majority of them contain no child abuse material.

Earlier, cryptographers expressed concerns

Earlier, cryptography professionals signed an open letter to Apple, arguing that although the system is meant to combat child abuse, it could open a “backdoor” for other types of surveillance.

The letter states that the new proposal, and the possible backdoor it introduces, could undermine fundamental privacy protections for all users of Apple products. One concern is that it would bypass end-to-end encryption and thus compromise users’ privacy.

Additionally, some Apple employees have reportedly opposed the new technology or at least raised concerns about its introduction, citing reasons similar to those in the cryptographers’ open letter.

According to Apple, the system will work only with known child abuse material, which would be hashed and compared by AI with photos stored in iCloud. The company explicitly underlined that any government request to use the technology for something other than CSAM will be refused.
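As a rough illustration of matching against known material only, the toy sketch below hashes each photo and checks whether that hash appears in a set of known hashes. It is not Apple’s method: SHA-256 is an assumed stand-in that only matches byte-identical files, and `photo_hash`, `find_matches`, `known_hashes`, and the sample data are hypothetical names invented for this example.

```python
import hashlib


def photo_hash(data: bytes) -> str:
    # Assumption: SHA-256 stands in for the real image hash.
    # It matches only byte-identical files, unlike a perceptual hash.
    return hashlib.sha256(data).hexdigest()


def find_matches(photos: dict, known_hashes: set) -> list:
    # Return the names of photos whose hash appears in the known set.
    return sorted(
        name for name, data in photos.items()
        if photo_hash(data) in known_hashes
    )


# Hypothetical data: one "known" item and two uploaded photos.
known_hashes = {photo_hash(b"known-example")}
photos = {"a.jpg": b"holiday picture", "b.jpg": b"known-example"}

flagged = find_matches(photos, known_hashes)
```

Only `b.jpg` ends up in `flagged`, because a photo that is not already in the known set can never match, whatever it depicts.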