Have you ever struggled to find the right keywords for a Google search? Well, if you ever have, I know exactly how you feel. But Google’s got us covered. It is introducing a new Google Lens feature called Multisearch, aimed at helping you find stuff you can’t easily describe, reports 9to5Google. It is rolling out in beta to US users, and it will allow you to take a photo of something and ask a question about it.

Google Lens Multisearch beta coming to the States now, both on Android and iOS

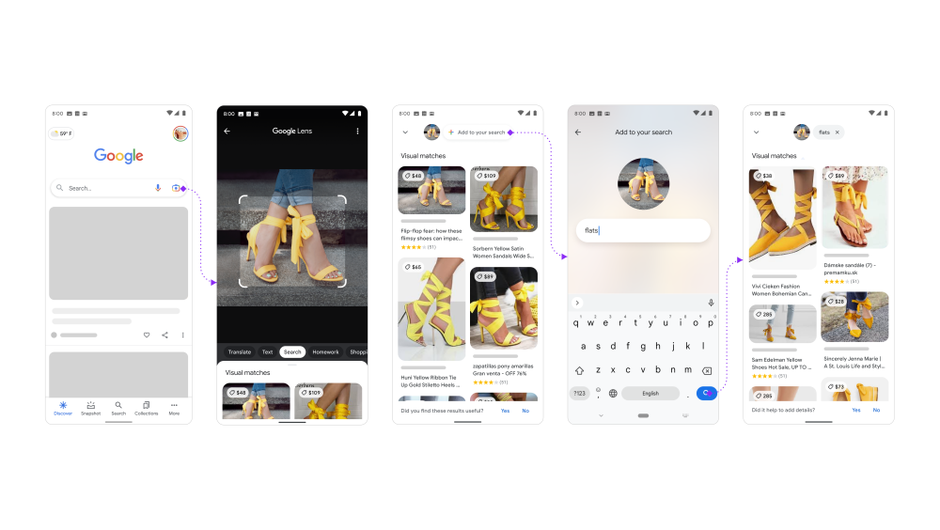

Back in September of last year, Google held its Search-focused keynote, and one of the coolest things the Mountain View tech giant announced during the event was a big upgrade to Google Lens. The Multisearch feature is now finally available for beta testers on Android and iOS. Pretty much, Multisearch is a completely new way to search in Google, and it is designed to help you with the things that you don’t have all the words to describe. Basically, you take a photo of the oh-so-indescribable thing (or import an existing one) with Google Lens, then swipe up on the results panel and tap the new “Add to your search” button located at the top.

The button will allow you to enter a question about the object, or refine your search by color, brand, or any other visual attribute. Yeah, but what does this mean exactly? Well, here are some examples.

Say you have taken a photo or a screenshot of a cool orange dress to give as a gift to your girl, but you want to see if it is available in another color: simply add “green” to the search and the results should show you the dress in green (if it has a green option, of course).

Another example: take a photo of your dining set, add the query “coffee table”, and you can find a matching or similar table.

Or… imagine your mom told you to look after her plant while she’s on a trip. Yeah, you don’t want to kill it, but what if you don’t even know which plant it is? Simple: take a picture of it and add the query “care instructions”, and Google Lens will save you from embarrassment.

So far, the highlights are fashion and home decor, and currently, the best results from Google Lens Multisearch are related to shopping searches, but we can easily see how this feature could grow and become even more useful in the future. The example Google gave with a rosemary plant shows that you don’t need to perform one search to identify the plant and then a second one for care instructions: the two steps are combined into one.

Advancements in AI are what make Google Lens Multisearch possible. However, at the moment it cannot handle more complex queries, because it doesn’t use the Multitask Unified Model yet. In case you’re wondering what that is, the Multitask Unified Model, or MUM, was showcased during a demo. It allowed you to take a photo of broken bicycle gears and get instructions for repairing them.

The Multisearch feature is now rolling out officially in beta for the US, and it is accessible from the Google app on both Android and iOS devices.

The company’s search director Lou Wang stated that this is aimed at helping people understand questions more naturally. Wang also stated that in the future, Multisearch will expand to videos, more images in general, and even “the kinds of answers you might find in a traditional Google text search” (via The Verge).