As you might have guessed from the title, I’ve spent the past month with a phone that uses eye-tracking for everyday interactions.

Before we go any further, let’s quickly run through the history of eye tracking on phones, a technology that goes back as far as the Galaxy S4.

A brief (really brief) history of eye tracking

Eye-tracking research dates all the way back to 1879, when Louis Émile Javal studied how people’s eyes move while they read, but for the sake of keeping this on topic, we’ll focus on smartphones.

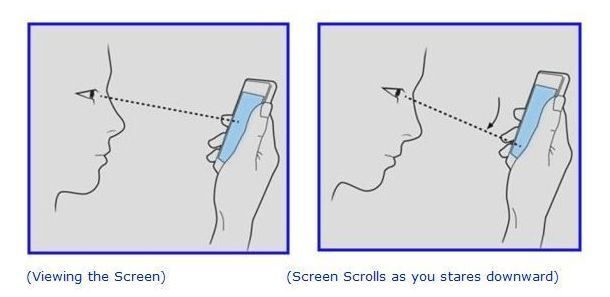

The Galaxy S4 had a simple eye-tracking system that could scroll the content on your phone’s screen depending on the tilt of your head and your gaze. Tilt down and look at the bottom of the screen, and the page starts to scroll upwards, and vice versa.

Many smartphone manufacturers have since explored touch-free navigation through gestures picked up by front-facing sensors (Air Motion on the LG G8 ThinQ, Motion Sense on the Pixel 4), but one company recently decided to resurrect eye tracking and make it part of the phone’s core UI: Honor.

Honor Magic series assistive eye-gaze control

I first stumbled upon this feature during a closed pre-brief of the Honor Magic 5 Pro, and the technology was impressive enough to intrigue me. Honor even pulled off a little PR stunt last year, letting journalists control a car with the same eye-tracking tech.

Would you trust your eyes to control your car? | Image by Honor

So how does it work? You first calibrate the system by gazing at points that pop up in different places on the screen.

Then, each time a notification appears on the screen, you can look at it, and it will expand. If you hold your gaze on it a bit longer, the phone opens the corresponding app. It sounds simple, and it is, but it’s also pretty helpful.
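For the curious, here is a minimal Python sketch of how that two-stage behavior could work. It is purely illustrative: Honor hasn’t published how its system is built, and the thresholds and names below (`EXPAND_AFTER_S`, `OPEN_AFTER_S`, `NotificationGaze`) are my own assumptions, not Honor’s.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the real timings are not public.
EXPAND_AFTER_S = 0.3   # gaze time before the notification expands
OPEN_AFTER_S = 1.2     # gaze time before the app opens


@dataclass
class NotificationGaze:
    """Tracks how long the gaze has rested on a notification."""
    gaze_seconds: float = 0.0

    def update(self, looking_at_notification: bool, dt: float) -> str:
        """Advance by dt seconds; return 'idle', 'expanded', or 'open_app'."""
        if not looking_at_notification:
            self.gaze_seconds = 0.0  # looking away resets the timer
            return "idle"
        self.gaze_seconds += dt
        if self.gaze_seconds >= OPEN_AFTER_S:
            return "open_app"
        if self.gaze_seconds >= EXPAND_AFTER_S:
            return "expanded"
        return "idle"
```

A short glance crosses only the first threshold and expands the notification; a longer stare crosses the second one and opens the app. Looking away resets everything.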

The prototype could also scroll pages, click on things, and go back and forward, all with just your eyes. While those features are still in the prototype phase, one eye-tracking feature is already available: in Magic OS 9, you can control notifications with just your eyes.

How useful is it, really?

There are specific use cases where this tech is pretty useful. Let’s say you’re driving, and your phone is on a car mount for navigation. When a notification pops up, you can just quickly glance at it without taking your hands off the wheel, and it will expand to show you whether it’s anything important.

I admit that this is a pretty niche application, but I can’t wait to get the full functionality, where you can scroll, blink to open and close apps, go back, and generally control more things without touching your phone. The eye-tracking accuracy is already very good, even with the phone a bit further away from you.

Your iPhone and Android phone have eye tracking too

The tech-savvy among you are probably typing comments about how eye tracking is already inside every phone out there. That’s true to some extent. On the iPhone, Eye Tracking arrived as an accessibility option in iOS 18, and it works on the iPhone 12 and later, as well as the third-generation iPhone SE.

How to enable and use eye tracking on your iPhone

- Go to Settings > Accessibility > Eye Tracking, then turn on Eye Tracking.

- Follow the onscreen instructions to calibrate Eye Tracking. As a dot appears in different locations around the screen, follow it with your eyes.

- After you turn on and calibrate Eye Tracking, an onscreen pointer follows the movement of your eyes. When you’re looking at an item on the screen, an outline appears around the item.

- When you hold your gaze steady at a location on the screen, the dwell pointer appears where you’re looking, and the dwell timer begins (the dwell pointer circle starts to fill). When the dwell timer finishes, an action—tap, by default—is performed.

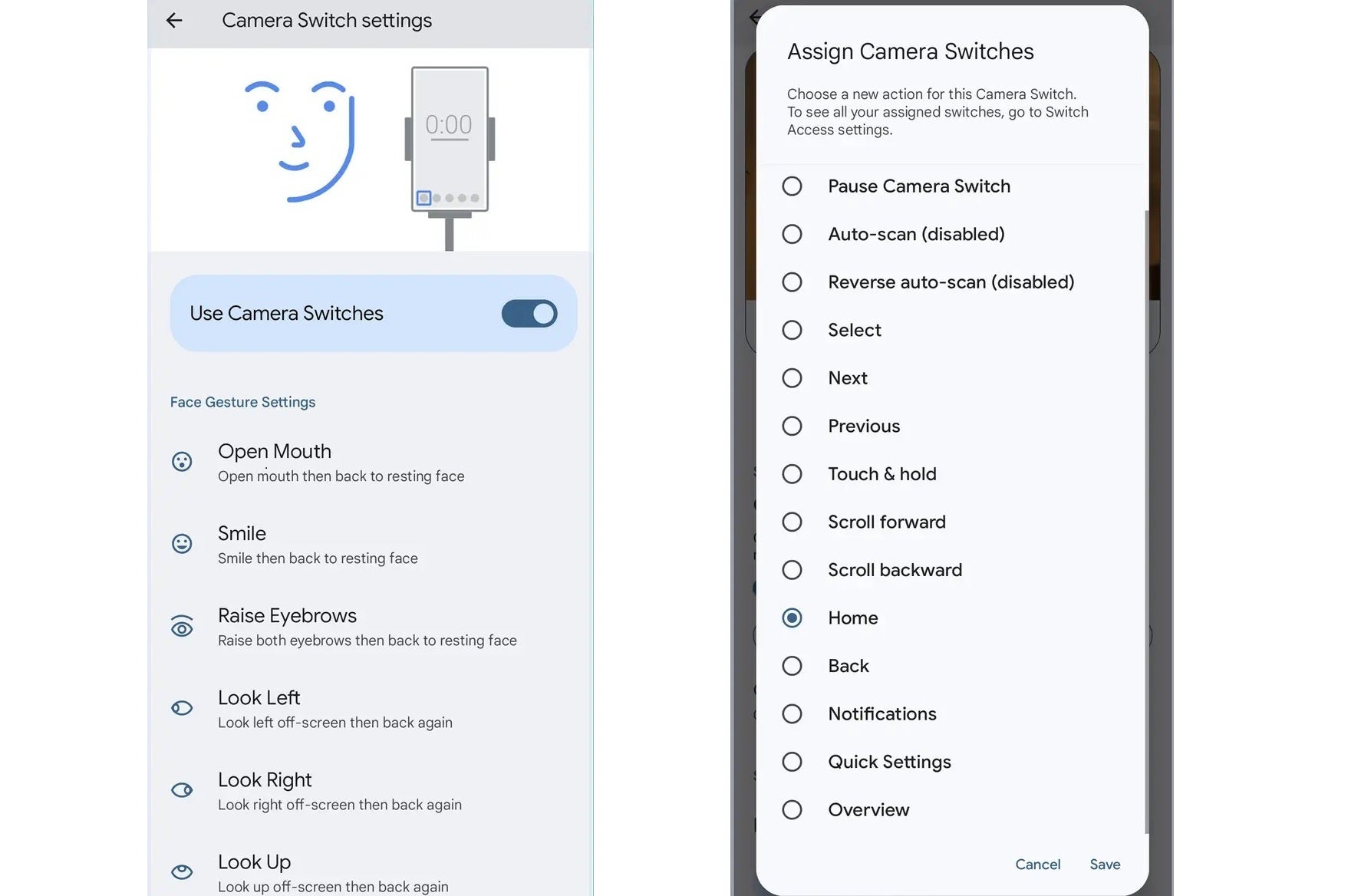

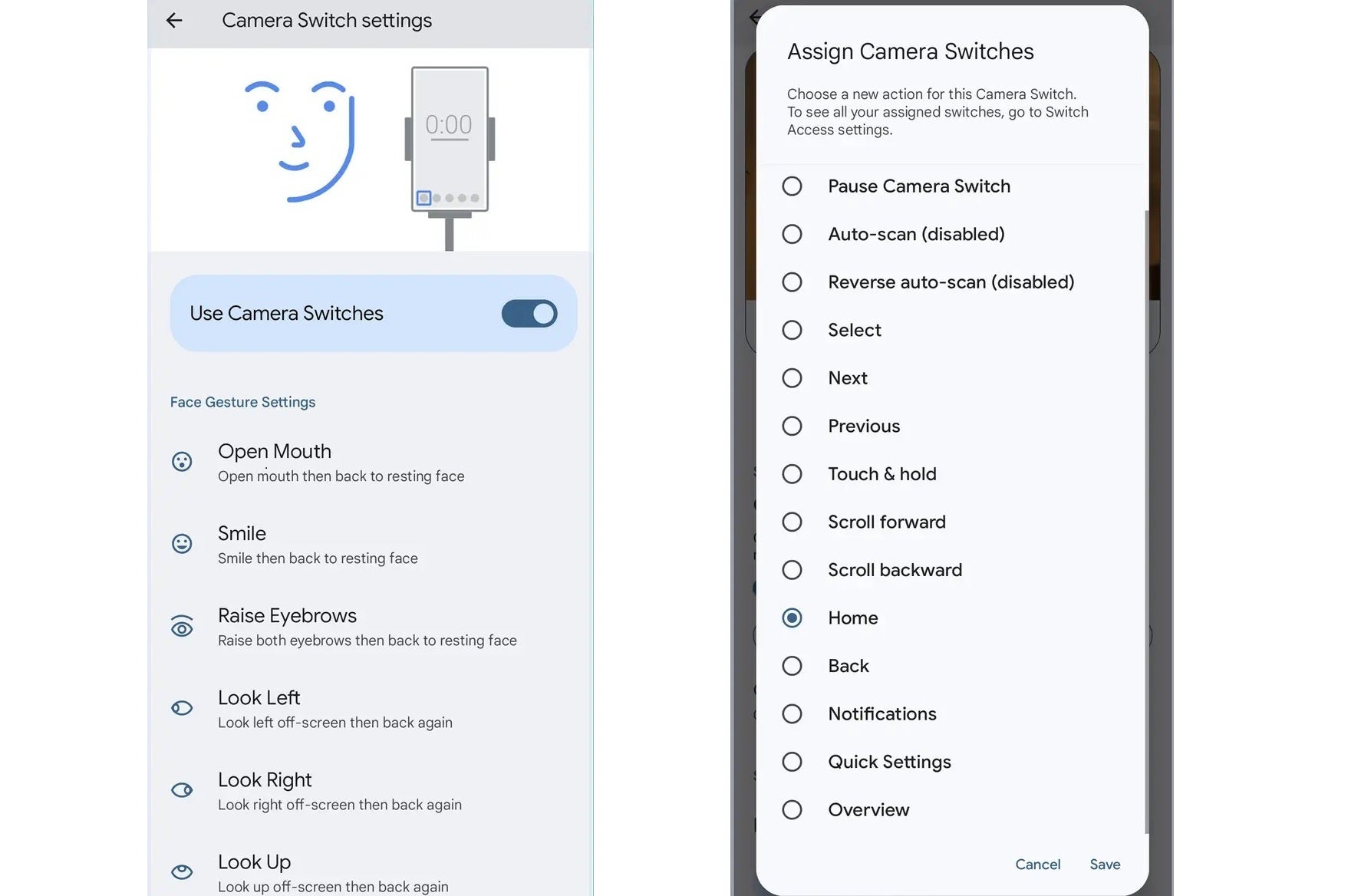
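The dwell behavior in that last step can be sketched in a few lines of Python. This is only a toy model, not Apple’s implementation: the class name `DwellTimer`, the one-second dwell time, and the 40-pixel steadiness tolerance are all assumptions I made up for illustration.

```python
import math


class DwellTimer:
    """Toy dwell selector: fires once the gaze stays near one spot long enough."""

    def __init__(self, dwell_seconds=1.0, tolerance_px=40.0):
        self.dwell_seconds = dwell_seconds  # assumed time needed to trigger
        self.tolerance_px = tolerance_px    # assumed allowed gaze wobble
        self.anchor = None                  # where the current dwell started
        self.elapsed = 0.0

    def update(self, x, y, dt):
        """Feed one gaze sample; return (fill_fraction, fired)."""
        moved = (
            self.anchor is None
            or math.dist(self.anchor, (x, y)) > self.tolerance_px
        )
        if moved:
            # The gaze jumped somewhere new: restart the timer there.
            self.anchor = (x, y)
            self.elapsed = 0.0
            return 0.0, False
        self.elapsed += dt
        if self.elapsed >= self.dwell_seconds:
            # Timer finished: perform the action and reset so it fires once.
            self.anchor = None
            self.elapsed = 0.0
            return 1.0, True
        return self.elapsed / self.dwell_seconds, False
```

The returned fill fraction stands in for the filling circle of the dwell pointer, and the `fired` flag stands in for the tap. The tolerance matters because nobody’s gaze is perfectly still; without it, tiny eye movements would keep resetting the timer.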

How to enable and use eye tracking on your Android phone

- Go to Settings > Accessibility > Switch Access (on Samsung phones, you need to install it first) > Use Switch Access.

- Follow the prompt to grant the system the permissions it needs to operate.

- Tap “Settings,” then “Camera Switch Settings,” and flip the toggle next to “Use Camera Switches.”

- You can now use gestures such as “open mouth,” “raise eyebrows,” “look left,” etc.

The tricky part here is that you need to activate these accessibility settings every time, either by swiping with two fingers from the bottom of the screen or by holding the volume buttons for a certain amount of time. This kind of defeats the purpose of controlling your phone without touching it, but for people with disabilities (whom this feature is actually designed for), the additional action is not that cumbersome.

One key difference

On both iPhone and Android devices, eye-tracking navigation is buried deep inside the accessibility settings. Honor takes a different approach and gives eye tracking center stage in its Magic OS.

It might be just me, but I think that using your eyes to control things is a logical evolution of the touch interface. If done right, it can save a lot of time and keep smudges off your screen as well. Not to mention the cool factor of making a gadget do things WITH YOUR GAZE. Obey me, tiny silicon slave!