Google will soon be using Multitask Unified Model (MUM) to make Search easier and more intuitive

You could type “white floral Victorian socks” into Search, but the results might not include the pattern you saw on the shirt. Google says, “By combining images and text into a single query, we’re making it easier to search visually and express your questions in more natural ways.”

The search giant says it has found that a complex search like this takes, on average, eight different searches to get all of the needed answers. But MUM both understands and generates language, according to Google. It is trained to handle multiple tasks at once and can work with 75 different languages. Being multimodal, it can understand information presented in both text and images.

MUM will help users find “more web pages, videos, images, and ideas”

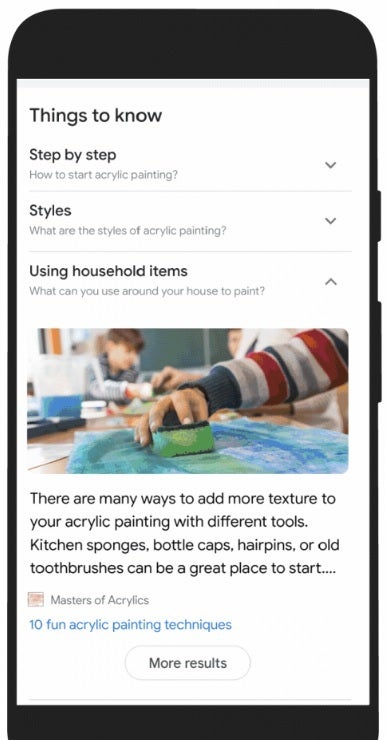

There are more than 350 different topics related to acrylic painting, and Google says that using MUM will put users on the right path and even give them ideas to search for that they might not have considered. That would include looking up a topic such as “how to make acrylic paintings with household items,” which returns content that could be extremely helpful to someone redecorating their home.

Google is also making it easier for users to zoom in and out of a topic to help them “refine and broaden searches.” And with videos, Google Search results will use MUM to show videos containing content related to your search, even if that content or topic isn’t mentioned explicitly in the video. Search can do this thanks to its advanced understanding of the information in the video.

The company says, “Across all these MUM experiences, we look forward to helping people discover more web pages, videos, images and ideas that they may not have come across or otherwise searched for.” But there is more. Google is also helping users decide whether the information they receive from the company is credible, and it will make it easier for users to shop from merchants both big and small.