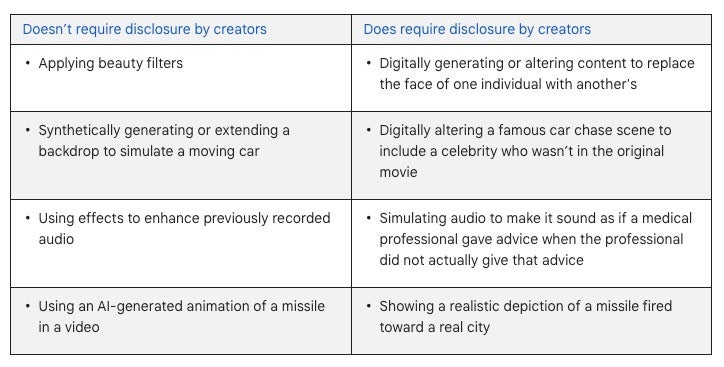

In a community post today, YouTube announced new disclosure requirements for creators who upload AI-generated content to the platform. During upload, creators will now be prompted to indicate whether their videos contain “meaningfully altered or synthetically generated” elements that could be mistaken for genuine footage. Disclosure won’t be required for simple edits, special effects, or obviously unrealistic AI creations.

Examples of altered or synthetic content | Source: YouTube Help

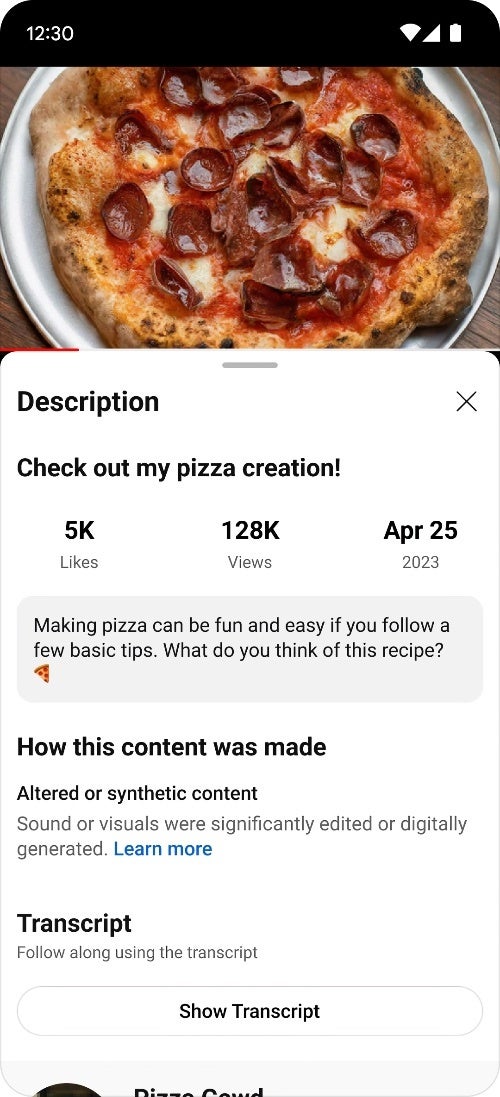

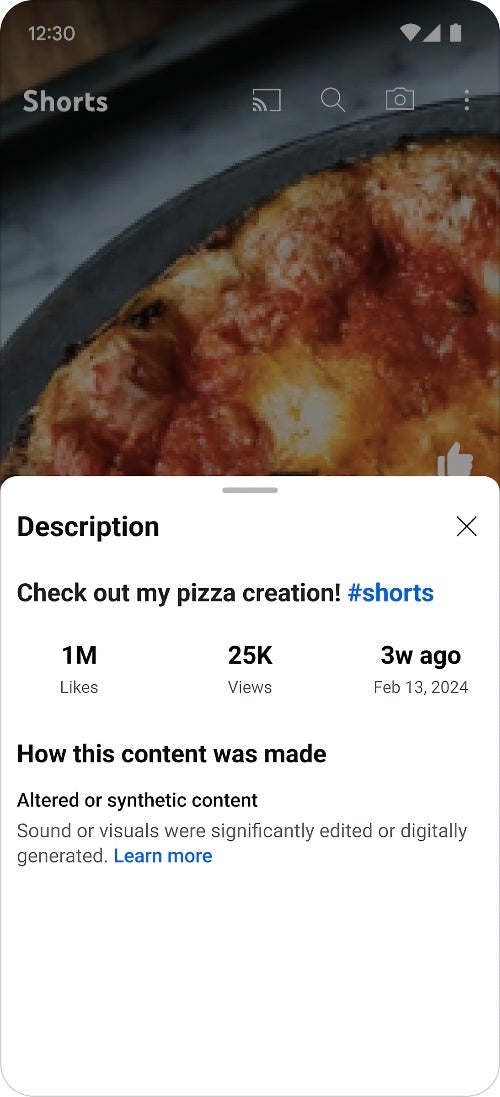

YouTube will display labels to inform viewers about AI-generated content. Most of the time, these labels will appear in the video’s expanded description. For videos discussing sensitive subjects like health, politics, or finance, the label will be placed prominently on the video itself.

AI-generated content labels on YouTube

If a creator doesn’t disclose, YouTube may in some cases apply a label to the video itself, especially when it involves sensitive topics. There are no immediate penalties for non-disclosure, but YouTube plans to introduce them in the future, potentially including content removal or suspension from the YouTube Partner Program.

The new labels are launching first for mobile viewers and will gradually expand to desktop and TV. The disclosure option for creators will be available on desktop first, then on mobile. YouTube is also seeking feedback to refine the process as it evolves.